Solving the asp-append-version problem with remote files in ASP.NET Core with a custom TagHelper

I recently came across an issue where a site wasn’t showing images that the customer had updated. Browser caching was the obvious first suspect, and that is exactly what it turned out to be.

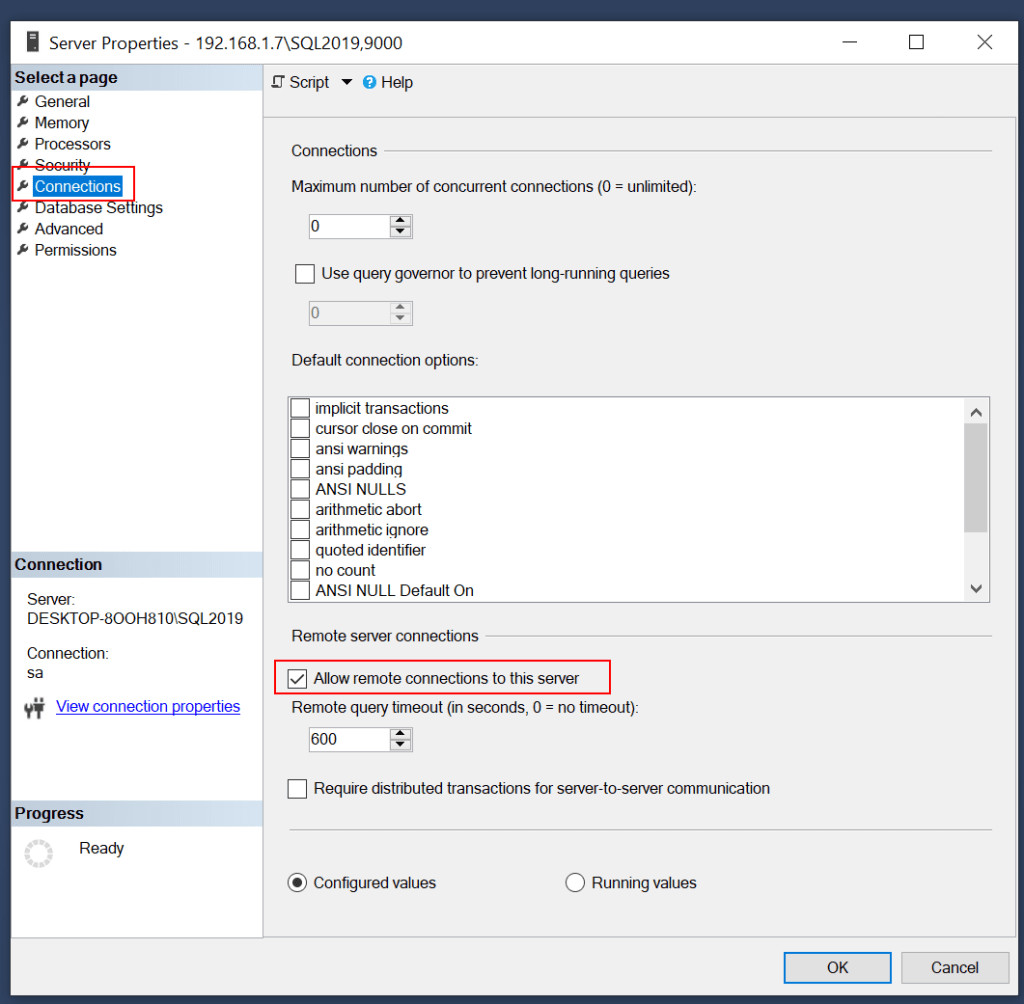

Some background: the site is hosted on Azure and provides a back-end portal that lets B2B customers order products via the website. The orders are then pushed to the customer’s Microsoft Dynamics NAV instance (now known as Microsoft Dynamics 365 Business Central). It was developed by my consultancy company in the UK, TAIG Solutions.

In this instance the product images are stored separately from the Azure site: they are hosted on the customer’s own on-prem server. This makes it easier for the designers to update the images, because they can just overwrite an image with a new one… and therein lies the problem.

One of the tools we have available is the asp-append-version attribute which, when applied to the img tag, adds a hash value of the file onto the end of the URL, so for example:

<img src="yourdomain.com/image.png?v=1234567890" />

The ?v=1234567890 part is the key. The hash is generated from the file’s contents, so if the image changes, so does the hash. The browser then sees a URL it has not cached and fetches the new image instead of using the cached one.
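To make the idea concrete, here is a minimal sketch of content-based versioning. It illustrates the principle only and is not the framework's actual code; the `ContentVersioning` class and `AppendContentHash` method are hypothetical names invented for this example.

```csharp
using System;
using System.Security.Cryptography;

public static class ContentVersioning
{
    // Hypothetical helper: appends a hash of the file's bytes as a "v" query parameter.
    // Different bytes give a different hash, and therefore a different URL.
    public static string AppendContentHash(string url, byte[] fileBytes)
    {
        byte[] hash;
        using (var sha = SHA256.Create())
        {
            hash = sha.ComputeHash(fileBytes);
        }

        // Make the Base64 text safe to use inside a URL.
        string token = Convert.ToBase64String(hash)
            .TrimEnd('=')
            .Replace('+', '-')
            .Replace('/', '_');

        // Respect a query string that is already present on the URL.
        string separator = url.Contains("?") ? "&" : "?";
        return url + separator + "v=" + token;
    }
}
```

The important point is that the hash needs the file's bytes, which means the application must be able to read the file.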

However, as we found out, this doesn’t work with files that are stored remotely, because the application has no local copy of the file to hash. There are a couple of solutions to this problem, but the easiest, and the one we chose, was to use the same versioning approach while generating our own version value. Rather than basing it on the file, we use the date. In this case the images change so often that we decided to do it on a day-by-day basis, but you could refresh less frequently.

So how do we do this? We create our own TagHelper, of course.

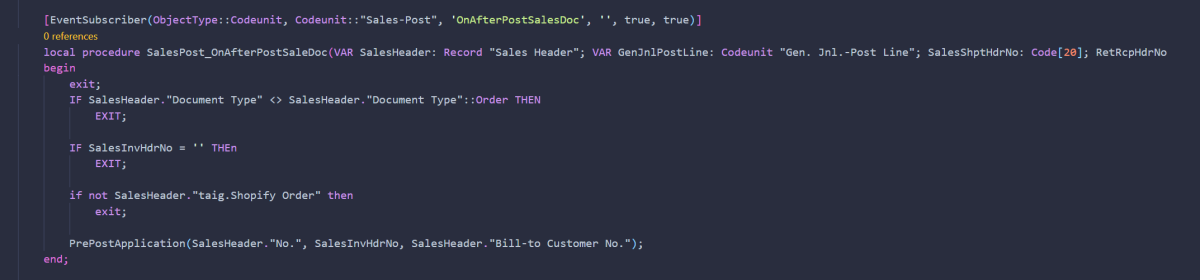

In your project, create a new class with the following code:

    using System;
    using Microsoft.AspNetCore.Razor.TagHelpers;

    namespace TAIG.Solutions.WebPortal.TagHelpers
    {
        [HtmlTargetElement("static-image-file", TagStructure = TagStructure.WithoutEndTag)]
        public class StaticImageTagHelper : TagHelper
        {
            // Can be passed via <static-image-file image-src="..." />.
            // PascalCase gets translated into kebab-case.
            public string ImageSrc { get; set; }

            public override void Process(TagHelperContext context, TagHelperOutput output)
            {
                output.TagName = "img"; // Replaces <static-image-file> with an <img> tag

                // Create a version token from today's date, so the URL changes once a day.
                // Could use a setting in future?
                string version = DateTime.Now.ToString("yyyyMMdd");

                // Generate the URL with the version appended
                string url = $"{ImageSrc}?v={version}";

                output.Attributes.SetAttribute("src", url);
            }
        }
    }

Now, in your _ViewImports.cshtml file, add the following:

@addTagHelper TAIG.Solutions.WebPortal.TagHelpers.StaticImageTagHelper, TAIG.Solutions.WebPortal

Obviously, if you change the class name you need to adjust this, and make sure you change the namespace and assembly name to the ones you are using.
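As an alternative to naming the class explicitly, the directive also accepts a wildcard in place of the type name, which registers every tag helper in the assembly. A minimal sketch, assuming the assembly name is the same as in the directive above:

```cshtml
@* Registers all tag helpers found in the TAIG.Solutions.WebPortal assembly *@
@addTagHelper *, TAIG.Solutions.WebPortal
```

This saves updating _ViewImports.cshtml each time a new tag helper is added, at the cost of being less explicit about which helpers are active.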

The result is that we can now use our own tag instead of img, so instead of

<img src="yourdomain.com/image.png" asp-append-version="true" />

We can now use

<static-image-file image-src="yourdomain.com/image.png" />

Now our code will add a ?v= parameter with a date value that changes each day.

Problem solved. Yes, if they change an image during the day and you have already viewed the page, you will have to wait until the following day to see the change, but that is good enough for us.
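If a day is too long to wait, the refresh interval could be made configurable. The following is a rough sketch of one way to do that, not something we have deployed: the `ConfigurableStaticImageTagHelper` class, its `VersionFormat` property (surfaced as a `version-format` attribute) and the `BuildUrl` helper are all hypothetical names. It is meant as a replacement for the helper above rather than an addition, since both target the same element. It also copes with an image URL that already has a query string.

```csharp
using System;
using System.Globalization;
using Microsoft.AspNetCore.Razor.TagHelpers;

[HtmlTargetElement("static-image-file", TagStructure = TagStructure.WithoutEndTag)]
public class ConfigurableStaticImageTagHelper : TagHelper
{
    public string ImageSrc { get; set; }

    // Hypothetical setting: a .NET date format string that controls how often
    // the version changes, e.g. "yyyyMMdd" (daily) or "yyyyMMddHH" (hourly).
    public string VersionFormat { get; set; } = "yyyyMMdd";

    public override void Process(TagHelperContext context, TagHelperOutput output)
    {
        if (string.IsNullOrEmpty(ImageSrc))
        {
            output.SuppressOutput(); // nothing sensible to render without a source
            return;
        }

        output.TagName = "img";
        output.Attributes.SetAttribute("src", BuildUrl(ImageSrc, VersionFormat, DateTime.Now));
    }

    // Kept separate from Process so the URL logic can be checked on its own.
    public static string BuildUrl(string imageSrc, string versionFormat, DateTime now)
    {
        string version = now.ToString(versionFormat, CultureInfo.InvariantCulture);

        // Use "&" when the URL already carries a query string.
        string separator = imageSrc.Contains("?") ? "&" : "?";
        return imageSrc + separator + "v=" + version;
    }
}
```

With this variant, `<static-image-file image-src="yourdomain.com/image.png" version-format="yyyyMMddHH" />` would produce a URL that changes every hour, so an updated image shows up within the hour rather than the next day.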